Prompt Images

Some technological breakthroughs happen as spectacle. This was true of the atomic bomb, the first of which was detonated in a desert in New Mexico at about 5:30 A.M. on the morning of July 16, 1945. Before that moment, the bomb was only hypothetical. The moment after the bomb detonated we had this:

Other breakthroughs proceed without receiving much attention. It’s only in hindsight that the rest of the world recognizes what those gathered around the lab bench had suspected all along: that this changes everything.

Consider the transistor.

The transistor technically first arrived in late 1947, courtesy of a group of researchers at Bell Labs. But it would be several decades before transistors would begin transforming the world—converting computers that previously filled factory floors into devices that fit on our desks, then in our hands, and ultimately on a 1-inch by 1-inch square of our wrists.

One of the differences between atomic bombs and transistors is that with the former, you either have an atomic bomb that works or you do not. That is, you either have a device that unlocks the energy within the very atoms that make up a material, or you have nothing. If you have such a thing, it’s nearly impossible to not make an unbelievably destructive explosion. Meanwhile, it’s possible to make a transistor that is qualitatively distinct from its predecessor (a vacuum tube—more on those later) but that doesn’t, in practice, move you much closer towards your goal of making faster and smaller computers.

Another difference is the technical nature of the breakthrough. Most people barely understand how electricity works. Announcing that some dorks at Bell Labs have invented a new kind of electrical “switch” is much less impressive to most people than showing them pictures of mushroom clouds. If the researchers at Bell Labs had announced their discovery of the transistor and, simultaneously, revealed the first iPhone to demonstrate its power, then we might all have appreciated exactly what they’d achieved.

This overly simplistic taxonomy of breakthroughs is relevant when we consider something that some other dorks at another giant corporate lab recently announced. An announcement that may not have made it to the front pages of Deadspin—err, I mean, The Verge. The company was none other than Google.

What was Google’s announcement?

That a team of engineers at the company has built a quantum computer that achieves something known as “quantum supremacy.”

If you find yourself at a loss to appreciate what this means, rest assured, you are not alone. That’s because this breakthrough is more transistor than atomic bomb. The world has not changed under your feet. But, it has changed.

In order to understand what quantum supremacy means, or even what a quantum computer is, we need to go back and start with something more basic:

What’s a computer?

Not the fancy new quantum kind. I’m talking about regular old computers (ROCs). The kind that you are using to read this very article.

A Very Brief History of Regular Old Computers (ROCs)

Once upon a time a “computer” was actually a person. For example, during World War II when some scientists or engineers needed to figure out some complicated calculation for where to drop a bomb, or to estimate how much energy might be unleashed by an atomic bomb, they’d build an assembly line of actual people (often women) to churn through the calculations. And I mean assembly line, because often one person only worked on one part of the calculation, then handed it off to another person who worked on the next part, and so on.

Eventually, like good capitalists, we figured out a way to automate those personal computers. Instead of using Sally to perform some piece of a giant calculation, scientists and engineers realized they could build little electrical devices to replace the work Sally was doing. They could then wire all those little electrical devices together into a gigantic machine to perform the entire calculation.

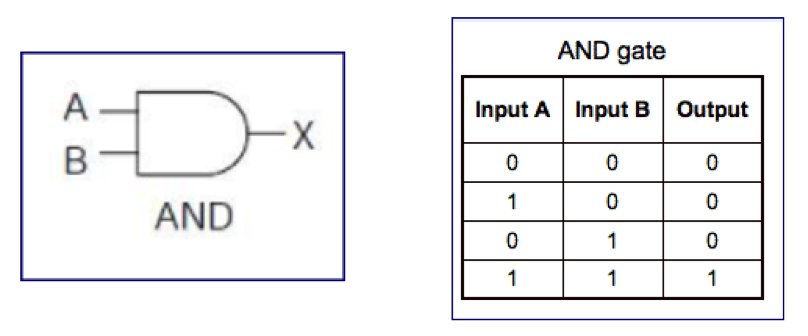

A major component of these computing machines was (and still is) something known as a logic gate.

Logic gates are things that take in simple inputs and spit out simple outputs.

Here’s an example of one kind of logic gate known as an “AND” gate. The two inputs are A and B, and the output is X in the diagram on the left. The output for different possible inputs (where A and B could be either 0 or 1) is given in the chart on the right. It doesn’t matter if you understand how it works, only that you understand that a logic gate is a device that takes simple inputs and “computes” simple outputs.
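If you'd rather see the AND gate as code than as a diagram, here is a minimal sketch in Python. The function names `and_gate` and `truth_table` are made up for illustration; the only thing taken from the description above is that the gate has two one-bit inputs (A and B) and one output (X).

```python
def and_gate(a: int, b: int) -> int:
    """Return 1 only when both inputs are 1, otherwise 0."""
    return a & b


def truth_table(gate):
    """List (A, B, X) rows for every combination of two one-bit inputs."""
    return [(a, b, gate(a, b)) for a in (0, 1) for b in (0, 1)]


# Print the same chart you would see next to a gate diagram.
print("A B X")
for a, b, x in truth_table(and_gate):
    print(a, b, x)
```

The output X is 1 in exactly one of the four rows: the one where A and B are both 1.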

Computers are just a collection of logic gates, more or less.

Give a computer an input (a calculation you want it to perform or a search query for “cute kittens”) and it “calculates” some stuff and then spits out an output (the answer to your calculation or a video of cute kittens). It’s obviously more complicated than this, but at a fundamental level it really is just logic gates taking in simple inputs and spitting out simple outputs, which are then fed to neighboring logic gates in a kind of convoluted telephone game.
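To make the telephone game a little more concrete, here is a rough sketch of a few gates wired together to add two one-bit numbers. This circuit is usually called a half adder. It is an illustrative example chosen for this article, not a description of any particular machine, and all of the function names are assumptions.

```python
def and_gate(a: int, b: int) -> int:
    return a & b


def or_gate(a: int, b: int) -> int:
    return a | b


def not_gate(a: int) -> int:
    return 1 - a


def xor_gate(a: int, b: int) -> int:
    # Built purely from the gates above: (a OR b) AND NOT (a AND b).
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two one-bit numbers and return (sum_bit, carry_bit)."""
    return xor_gate(a, b), and_gate(a, b)


for a in (0, 1):
    for b in (0, 1):
        sum_bit, carry_bit = half_adder(a, b)
        print(f"{a} + {b} -> carry {carry_bit}, sum {sum_bit}")
```

Each gate only ever sees zeros and ones, yet chaining them produces arithmetic: 1 + 1 comes out as carry 1, sum 0, which is the binary number 10, otherwise known as two.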

In an electrical device, the logic gates are literally electric switches that allow electrons to flow through the gate and create an electrical voltage. The “values” of the inputs and outputs are represented physically by electric voltages. Zero may be represented by a lower voltage and 1 may be represented by a higher voltage.

The earliest computers used vacuum tubes to do the switching. Here are some vacuum tubes shown next to a banana so you can see the scale.

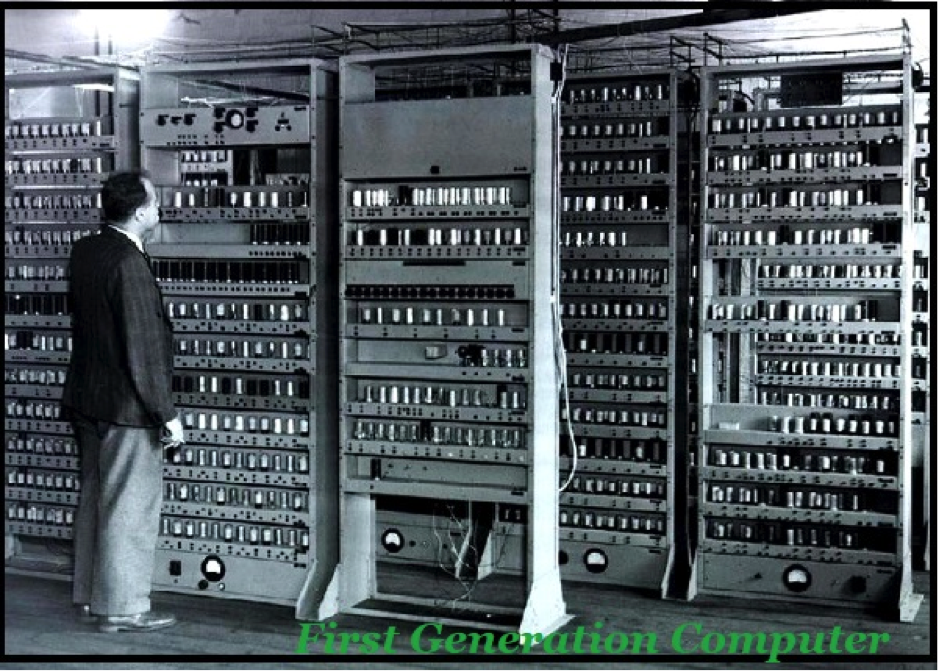

This is really cool for making “radios” that look old fashioned, but for a computer that needs a lot of switches, you end up with monsters like this:

However, once transistors were invented, everything changed.

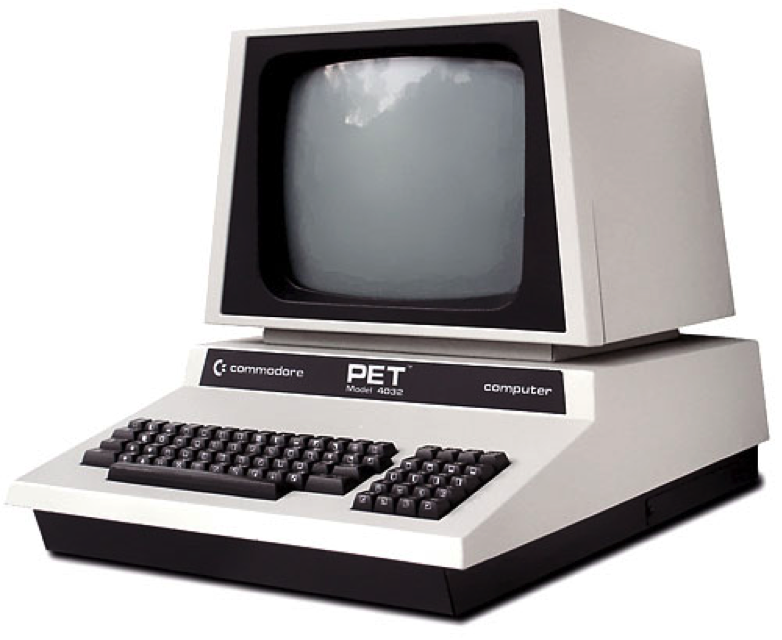

You can make transistors that are much, much smaller than even the smallest vacuum tubes. So within a few decades you go from the above Goliath to the PET computer, below, which we definitely owned in my house.

As time went on, computer geeks figured out ever more clever ways to shrink these devices and pack ever more computing power into them.

But, despite the digital revolution that was made possible first by the earliest incarnations of the computer, and then by the transistors that enabled us to fit computers into every pocket of our lives, the basic operations within a computer haven’t really changed.

It’s all just logic gates that manipulate zeros and ones.

Around the time that IBM and co. were launching a revolution in regular old computers, some physicists—who had spent the decades since the war exploring all sorts of weird facets of the new “quantum physics”—realized that nature might provide a way for us to build a different kind of computer. A quantum computer.

So what is a quantum computer and how is it different from a classical computer?

Recall that classical computers are snazzy devices to manipulate 0’s and 1’s. No matter what is happening inside the computer, the inputs and outputs at any given logic gate are all either 0’s or 1’s. Computer scientists refer to these fundamental building blocks of computing as bits. A bit is a logical value, which can either be a 0 or a 1. As described above, each bit in a regular old computer is physically represented as a voltage: a lower voltage for 0 and a higher voltage for 1. (At least this is how they are represented when they are being used in logic gates; they may be stored in memory in other ways.)

But quantum computers don’t run on bits, they run on qubits.

Qubits aren’t represented in a quantum computer by voltages. They are represented by different quantum states of things like atoms. If you know anything about quantum mechanics, you know that things like atoms behave in really weird ways. Thus, it shouldn’t be surprising to learn that qubits behave in weird ways.

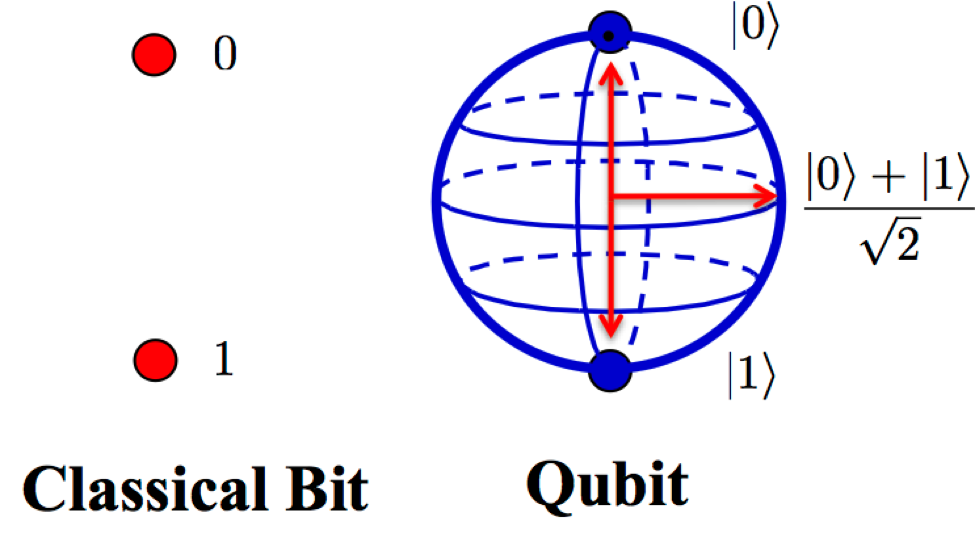

In this case, qubits can be in a state representing 0 or a state representing 1, like a classical bit. But they can also be in a state that is “partly 0 and partly 1.” I don’t mean like halfway between 0 and 1. I mean a “combination” of 0 and 1 that is impossible to understand intuitively but which physicists can “understand” just fine using elaborate mathematics.

Here’s a picture comparing classical bits to qubits. The point of showing you this image is NOT to help clarify qubits for you. It’s to hammer home the idea that qubits are fundamentally weird things that are basically impossible to show in an intuitive way. If you want to think of qubits as “magic,” be my guest. So long as you appreciate that physicists know how to predict how these magic bits will behave.
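For the curious, the simplest version of that mathematics fits in a few lines. The sketch below assumes the standard textbook description of a single qubit as a pair of numbers called amplitudes, one for 0 and one for 1, and uses a common quantum logic gate called the Hadamard gate. The amplitudes are kept as ordinary real numbers here for simplicity (in general they can be complex), and the function names are made up for illustration.

```python
import math

# A qubit's state is a pair of amplitudes (alpha, beta) for the outcomes 0 and 1.
ZERO = (1.0, 0.0)  # definitely 0
ONE = (0.0, 1.0)   # definitely 1


def hadamard(state):
    """Apply the Hadamard gate, which turns a definite 0 or 1 into an equal combination."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def probabilities(state):
    """Chance of reading 0 or 1 when the qubit is measured."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)


combined = hadamard(ZERO)
print("after one Hadamard:", probabilities(combined))
print("after two Hadamards:", probabilities(hadamard(combined)))
```

After one gate the qubit has an even chance of being read as 0 or 1. After a second identical gate it is back to being 0 with (up to rounding) certainty, which is not what you would get by flipping a coin twice. That is a small taste of the weirdness.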

The upshot is that you can build new kinds of logic gates to manipulate qubits and perform certain kinds of calculations that you cannot do efficiently with a regular old computer.

The “efficiently” qualifier here is key.

You could always use a regular old computer to sort of “simulate” what the quantum computer is doing, but for some problems that would take more time than we have left in the universe, so the quantum computer provides a real practical advantage. The “certain” qualifier is also important to keep in mind. Quantum computers may only provide an advantage in solving some kinds of problems. There may be many problems that can be solved just as efficiently using regular old computers.
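One way to see why simulation gets out of hand is to count how many numbers a regular old computer has to keep track of. The sketch below assumes the most naive approach, which stores one amplitude for every possible combination of qubit values, and assumes each amplitude takes 16 bytes (a double-precision complex number). Both are assumptions for illustration, and cleverer simulation methods exist.

```python
def amplitudes_needed(n_qubits: int) -> int:
    """A full description of n qubits needs one amplitude per combination of 0s and 1s."""
    return 2 ** n_qubits


def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for the naive simulation, assuming 16 bytes per amplitude."""
    return amplitudes_needed(n_qubits) * bytes_per_amplitude


for n in (10, 30, 54):
    gib = state_vector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib:,.6f} GiB")
```

Every extra qubit doubles the bill. Under these assumptions, 30 qubits need 16 GiB, which a good laptop can manage, while 54 qubits need 2^58 bytes, or 256 pebibytes.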

Of course, this is all just theory. The idea of a computer was first conceived long before a working computer was ever built. Likewise, physicists conceived of quantum computers in the early 1980s, but only recently have there been devices that experts would be willing to even consider calling a “quantum computer.”

And along the way there have been critics claiming that while quantum computing is possible in theory, there are so many practical problems with building a quantum computer that, for all intents and purposes, it is impossible. Much as it is (in theory) possible to build a tower from here to the moon, from an engineering standpoint it’s a non-starter.

The Age of Quantum Supremacy

And this is where we stood up until just a few weeks ago, when Google made their big announcement. They claimed they had achieved something known as “quantum supremacy” with a quantum computer.

To appreciate what quantum supremacy means, consider the problem with comparing quantum computers with their classical counterparts. Clearly, the more computational power you can throw at a problem, the faster you can solve it. It may not be feasible for your desktop to perform some intensive calculation, but maybe the NSA has a supercomputer that can do it fairly easily.

But if some classical computers are much more powerful than others, how do we compare any of them to quantum computers?

Maybe there’s a quantum computer that is more powerful than your Tamagotchi, but does it matter if the world’s best supercomputers still blow it away in any problem that matters?

This is why quantum computing researchers came up with the idea of “quantum supremacy.”

The claim that “quantum computers are better than classical computers” is too vague to be of any use, especially in an era dominated by classical computers. In a nutshell, quantum supremacy is the point in the evolution of computing at which a quantum computer can perform some calculation much more efficiently (i.e., quickly) than any existing classical computer.

And this is what the Google team did.

They built a computer with 54 qubits (called Sycamore) that could perform a very specific (and honestly, currently useless) calculation much, much more efficiently than the best supercomputers in the world. Specifically, they compared their results of said calculation with how long they estimated the same calculation would take on Summit, a supercomputer built by IBM and housed at Oak Ridge National Laboratory, that is as big as two basketball courts.

The difference in the amount of time required to solve this same problem on the two different systems?

200 seconds on Google’s quantum computer

Vs.

(an estimated) 10,000 years on IBM’s classical computer.

Google’s computer crushes IBM’s computer, right?

But before we get too excited, soon after Google announced its results, IBM came back and claimed that its supercomputer could actually do this calculation in just 2.5 days. That may seem a lot less impressive. But considering that Google’s computer has just 54 qubits and IBM’s computer is like the biggest baddest supercomputer the world has ever known, it’s still pretty impressive that Google’s computer is, more or less, 2.5 days faster.
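It helps to put those numbers side by side. The snippet below is just arithmetic on the figures quoted above (200 seconds, 2.5 days, and 10,000 years); the only assumption is treating a year as 365 days.

```python
SECONDS_PER_DAY = 24 * 60 * 60

quantum_seconds = 200
ibm_claim_seconds = 2.5 * SECONDS_PER_DAY
google_estimate_seconds = 10_000 * 365 * SECONDS_PER_DAY  # assumes 365-day years


def speedup(classical_seconds: float, quantum_seconds: float) -> float:
    """How many times faster the quantum run is than the classical one."""
    return classical_seconds / quantum_seconds


print(f"vs. IBM's claim:       {speedup(ibm_claim_seconds, quantum_seconds):,.0f}x")
print(f"vs. Google's estimate: {speedup(google_estimate_seconds, quantum_seconds):,.0f}x")
```

Even taking IBM's figure at face value, the quantum computer comes out roughly a thousand times faster. Taking Google's estimate instead, the gap is over a billion.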

And this difference will grow substantially as you add more qubits to the quantum computer.

Thus, despite IBM’s attempt to rain on Google’s parade, many of the experts think that Google’s claim is still well-founded. It’s a quantum computer that can do something better than any classical computer, even the two-basketball-court-sized supercomputer, and will likely continue to separate itself from the world’s classical computers over time.

So How Big a Deal Is This?

This is a big deal, folks. Yuge. It isn’t going to change anything about your life tomorrow. It may not change anything about your life for a couple more decades. But remember, this is the transistor, not the A-bomb. Quantum computers were never going to change the world overnight. Nor, you may recall, did the internet, or the first wave of personal computers.

Still, we’ve witnessed a phase change in the world of computing. One that will very likely compound over time.

You can be sure the NSA is currently busy devising new ways of encrypting information, since one expected application of large enough quantum computers is breaking many of today’s most widely used encryption schemes pretty easily. And computer manufacturers are dreaming of the revenues from quantum computers on every desktop, and eventually, in every hand.