
As you may know, there is currently a debate about whether we are at risk of being unemployed/destroyed/alloyed by robots in the near future. Take, for example, a piece published last year claiming that the automated revolution is nigh. I’m sympathetic to the claims made in that piece, mostly because I wrote it. It sure does feel like artificial intelligence is accelerating at a breakneck pace.

Here’s a list of the many impressive things that AI can do right now:

- Recognize faces as well as, or better than, people

- Understand spoken words, translate words and say words back to you with really impressive accuracy

- Read those stupid “captchas” that were designed to be unreadable by machines

- Drive

- Literally put words in Obama’s mouth (you should click through to watch one of the most disturbing uses of AI to date)

These are all very recent achievements. Do we have any reason to think it won’t keep accelerating until Homo terminatus becomes the dominant species on our planet?

Apparently archeologists of the future will be able to identify old, broken-down Terminator models using dental records.

In fact, we do. Although these particular capabilities are very new, the ideas underlying them are not. Some of those ideas are older than a few of the folks reading this piece right now.

In his piece “Is AI Riding a One-Trick Pony?”, published in MIT Technology Review, James Somers questions the accepted wisdom that we are at the beginning of a new revolution in artificial intelligence. Maybe, he suggests, we are actually at the end of one.

Somers’s piece is part history and part profile, and it centers on one man and a single idea. The man is AI researcher Geoffrey Hinton. The idea is a bit technical: an algorithm in artificial intelligence called backpropagation.

What is backpropagation and why should we care?

Well, as Somers writes, “When you boil it down, AI today is deep learning, and deep learning is backprop—which is amazing, considering that backprop is more than 30 years old.”

Here’s what Somers means: artificial intelligence is a really big field, encompassing a ton of different ideas and techniques that have evolved over more than half a century of research. But standing in 2017, you could be forgiven for ignoring 99 percent of them. Right here, right now, the cutting-edgiest parts of AI are powered by one particular technique: deep neural networks. For our purposes, you can just think of deep neural networks as black boxes that can be “trained” to learn things, like how to identify a cow in pictures or translate between French and Urdu.

Neural networks are themselves pretty old, dating back to the 1940s. But they were too unwieldy to run on any computers of the time.

This computer filled a large room and probably had less computing power than your old Tamagotchi pet.

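In 1986, Hinton, together with David Rumelhart and Ronald Williams, published a paper showing how a clever technique called backpropagation could train neural networks with multiple layers, making them feasible to use for the first time. “Backprop” is the digital lubricant: it efficiently works out how much each connection in the network contributed to a mistake, so that training a computationally intensive neural network doesn’t fry your CPU.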
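
To make backprop a little less mysterious, here is a minimal sketch in Python. It is not Hinton’s code or any library’s implementation: the tiny network (two inputs, one hidden layer of sigmoid units, one output), the squared-error loss, the XOR training data, and every function name are illustrative assumptions. The key idea lives in `backprop()`: run the network forward, measure the error at the output, then pass that error backwards layer by layer using the chain rule, so a single sweep yields the adjustment for every weight at once.

```python
# Illustrative sketch only: network shape, loss, data, and names are assumptions,
# not taken from the article or from any particular library.
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    """Small random weights for a network with one hidden layer and one output."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(params, x):
    """Forward pass: inputs -> hidden sigmoid units -> one sigmoid output."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(params["W1"], params["b1"])]
    y = sigmoid(sum(w * hj for w, hj in zip(params["W2"], h)) + params["b2"])
    return h, y


def loss(params, data):
    """Mean squared error (with the conventional 1/2 factor) over the dataset."""
    return sum(0.5 * (forward(params, x)[1] - t) ** 2 for x, t in data) / len(data)


def backprop(params, data):
    """Gradient of loss() with respect to every weight, via the chain rule run backwards."""
    n_hidden, n_in = len(params["W2"]), len(params["W1"][0])
    gW1 = [[0.0] * n_in for _ in range(n_hidden)]
    gb1 = [0.0] * n_hidden
    gW2 = [0.0] * n_hidden
    gb2 = 0.0
    for x, t in data:
        h, y = forward(params, x)
        # Error signal at the output unit: dLoss/dz, using sigmoid'(z) = y * (1 - y).
        delta_out = (y - t) * y * (1 - y)
        gb2 += delta_out
        for j in range(n_hidden):
            gW2[j] += delta_out * h[j]
            # Send the error back through weight W2[j] to hidden unit j.
            delta_h = delta_out * params["W2"][j] * h[j] * (1 - h[j])
            gb1[j] += delta_h
            for i in range(n_in):
                gW1[j][i] += delta_h * x[i]
    n = len(data)
    return {
        "W1": [[g / n for g in row] for row in gW1],
        "b1": [g / n for g in gb1],
        "W2": [g / n for g in gW2],
        "b2": gb2 / n,
    }


def train_step(params, data, lr):
    """One step of gradient descent: nudge each weight against its gradient."""
    g = backprop(params, data)
    return {
        "W1": [[w - lr * gw for w, gw in zip(wr, gr)]
               for wr, gr in zip(params["W1"], g["W1"])],
        "b1": [b - lr * gb for b, gb in zip(params["b1"], g["b1"])],
        "W2": [w - lr * gw for w, gw in zip(params["W2"], g["W2"])],
        "b2": params["b2"] - lr * g["b2"],
    }


if __name__ == "__main__":
    # XOR: a classic task that a single sigmoid unit (no hidden layer) cannot learn.
    xor = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    params = init_params(n_in=2, n_hidden=4, seed=0)
    print("loss before training:", round(loss(params, xor), 4))
    for _ in range(5000):
        params = train_step(params, xor, lr=1.0)
    print("loss after training:", round(loss(params, xor), 4))
```

The backwards sweep is where the efficiency comes from. Instead of wiggling each weight separately to see what happens to the error (one extra pass through the network per weight), backprop gets every gradient for roughly the cost of one forward pass plus one backward pass. Modern deep-learning libraries do this same bookkeeping automatically, for networks with millions of weights.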

The Gamechanger

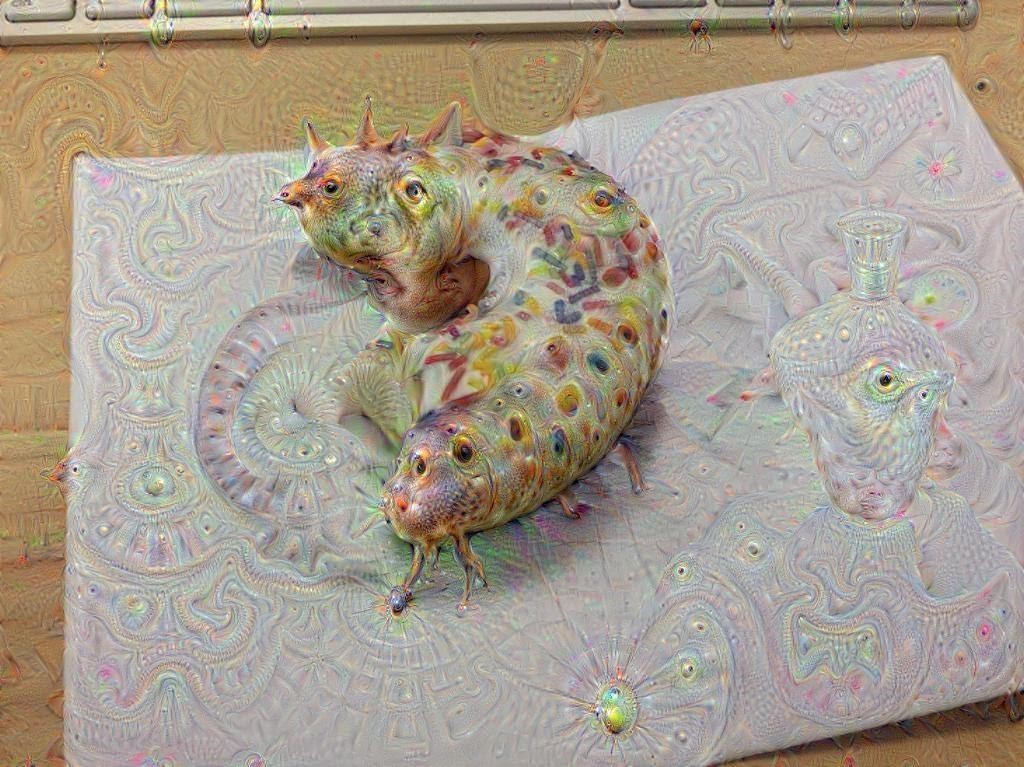

Over time, as computers have become more powerful and data more readily available, neural networks have gone from “neato” to “gamechanger.” Before backprop powered deep neural networks, this computer generated image of a donut-caterpillar hybrid would have been impossible:

This was generated by Google’s “Deep Dream” program. I won’t be able to eat donuts for at least a day.

Scientists and engineers have also used deep neural networks to do practical things, like drastically improving automated image and speech recognition. This renaissance of neural networks also set off a hiring frenzy, as Google, Facebook, and many others scrambled to snatch up Geoffrey Hinton and a handful of his collaborators, who had all spent their careers in a “backwater” of AI research. Each suitor promised to bestow upon them a commercial research department stocked with armies of computer scientists, plus seven-figure salaries.

All of this happened within the last five years, which suggests that the latest developments in AI are just hitting their stride.

So why isn’t Somers convinced?

We tend to get swept up in the potential for radical change when new technologies come along. We build in our minds an entirely new future, forgetting that Rome was not built in a day, nor on a single idea.

If you were to compare a ride on an airplane from the 1950s with one today, I’m guessing they would feel pretty similar. We had, for a brief moment, the promise of supersonic passenger flight, but that never really stuck.

Then there was the space race: in 1969 we landed a man on the moon. We did it five more times by the end of 1972. But since then? No more manned moon landings. No manned landings on Mars. We’ve had probes land in a few places in the solar system, but mostly we have spent decades launching more and more shit into Earth’s orbit, something we could already do with pre-1969 rocket technology.

Remember when the iPhone was launched and we were all like, holy shit, gamechanger? And it was. But doesn’t it feel like most of the game-changiness happened during those first couple years of waiting in lines with all the other Apple fanboys? Can anyone even say what’s substantively different between the iPhone 6 and iPhone 7 without looking at the spec sheet on Apple’s website?

In the field of AI, this phenomenon happens so often that researchers have a term for it: AI winter, a period of reduced expectations (and funding) that sets in when the latest AI hype bubble bursts and people realize, again, that we are still quite far from machines that think.

Currently, backpropagation is the secret sauce in everything from your favorite digital assistant to the Facebook algorithm that knows everything about you from a few dozen clicks. It’s an impressive record, but surely one idea can only take AI so far.

Singular ideas are brittle. Once upon a time, some single-celled organism evolved to “sense light.” It was a clever trick, but the world would have to wait a few billion more years, and for a few more (r)evolutionary ideas, to get Homo sapiens: a species so fiendishly intelligent that we’ve figured out how to annihilate an entire planet using a single message of no more than 140 characters.

At some point, AI will likely need another “big idea” if we are ever to leapfrog closer to a world where machines take our jobs and our hearts. More realistically, it will need a lot of big ideas.

As to what those ideas will be, and when they will arrive?

You can try asking Alexa, but I doubt she knows any more than we do.